In an article in The Conversation on a recent report issued by the Oxford Internet Institute, researcher Hanna Bailey wrote: “Donald Trump’s removal from social media platforms has reignited the debate around the censorship of information published online, but the issue of disinformation and manipulation on social media goes far beyond one man’s Twitter account. And it is much more widespread than previously thought.”

Bailey is part of an Oxford Internet Institute team that has been monitoring the global proliferation of social media manipulation campaigns – defined as the use of digital tools to influence online public behaviour – over the past four years.

The latest media manipulation survey from the OII, authored by Philip N. Howard, Hanna Bailey and Samantha Bradshaw, explores the ways government agencies and political parties have used social media to spread political propaganda, pollute the digital information ecosystem and suppress freedoms of speech and press.

Professor Philip Howard, Director of the OII, says:

“Our report shows misinformation has become more professionalised and is now produced on an industrial scale. Now more than ever, the public needs to be able to rely on trustworthy information about government policy and activity. Social media companies need to raise their game by increasing their efforts to flag misinformation and close fake accounts without the need for government intervention, so the public has access to high-quality information.”

The report found a clear trajectory in professionalized, industrialised misinformation production by mainstream communications and public relations firms. It reveals that “while social media can enhance the scale, scope, and precision of disinformation, many of the issues at the heart of computational propaganda — polarisation, distrust and the decline of democracy — have pre-dated social media and even the Internet itself”.

In Hanna Bailey’s opinion, despite the Cambridge Analytica scandal exposing how private firms can meddle in democratic elections, the research found an alarming increase in the use of “disinformation-for-hire” services across the world:

“Using government and political party funding, private-sector cyber troops are increasingly being hired to spread manipulated messages online, or to drown out other voices on social media.”

3 key trends in 81 countries

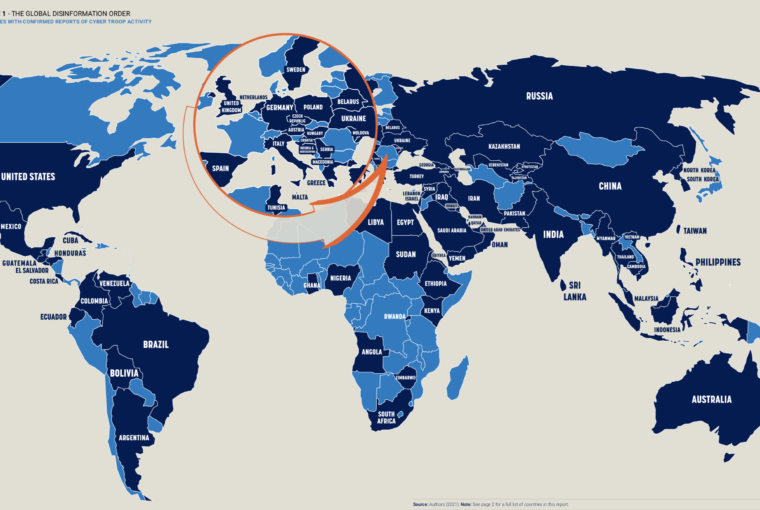

OII’s report describes the tools, capacities, strategies, and resources used to manipulate public opinion in 2020. It identifies the recent trends of computational propaganda across 81 countries:

“1. Cyber troop activity continues to increase around the world. In 2020 the authors found evidence of 81 countries using social media to spread computational propaganda and disinformation about politics. This has increased from last year’s report, in which 70 countries with cyber troop activity were identified.

2. Over the last year, social media firms have taken important steps to combat the misuse of their platforms by cyber troops. Public announcements by Facebook and Twitter between January 2019 and November 2020 reveal that more than 317,000 accounts and pages have been removed by the platforms. Nonetheless, almost US $10 million has still been spent on political advertisements by cyber troops operating around the world.

3. Private firms increasingly provide manipulation campaigns. The 2020 report found firms operating in 48 countries, deploying computational propaganda on behalf of a political actor. Since 2018 there have been more than 65 firms offering computational propaganda as a service. In total, we have found almost US $60 million was spent on hiring these firms since 2009.”

The long list of countries in which cyber troop activity was found, 81 in all, indicates how widespread these operations have become.

The actors leveraging social media to shape public opinion, set political agendas and propagate ideas

According to the survey, government agencies are increasingly using computational propaganda to direct public opinion. The researchers found evidence of government agencies using computational propaganda to shape public attitudes in 62 countries:

“This category of actors includes communication or digital ministries, military campaigns, or police force activity. This year, we also included counts for state-funded media under our government agency banner, as some states used their state-funded media organisations as a tool to spread computational propaganda both domestically and abroad”.

Belarus is mentioned as an example of state-funded media, with the government controlling more than six hundred news outlets, many of which show evidence of propaganda and manipulation.

The researchers found evidence in 61 countries of political parties or politicians running for office using the tools and techniques of computational propaganda as part of their campaigns:

“Indeed, social media has become a critical component of digital campaigning, and some political actors have used the reach and ubiquity of these platforms to spread disinformation, suppress political participation, and undermine oppositional parties”.

The document highlights Tunisia, saying that Facebook pages without direct links to candidates amplified disinformation and polarising content in the lead-up to the vote. According to the report, Michael Bloomberg, a candidate in the US Democratic Party’s presidential primary, also used fake Twitter accounts for his campaign, hiring hundreds of operators to artificially amplify support.

The study also mentions the role of private firms as a source of computational propaganda, based on evidence from platform take-downs of coordinated inauthentic behaviour, as well as ongoing journalistic investigations that have helped identify a growing number of political communication firms involved in spreading disinformation for profit.

Civil society organisations are also named as an important feature of the manipulation campaigns. The research found that cyber troops often work in conjunction with such groups, including internet subcultures, youth groups, hacker collectives, fringe movements, social media influencers and volunteers who ideologically support a cause.

“The distinction between these groups can often be difficult to draw, especially since activities can be implicitly and explicitly sanctioned by the state. In this report, we look for evidence of formal coordination or activities that are officially sanctioned by the state or by a political party, rather than campaigns that might be implicitly sanctioned because of factors such as overlapping ideologies or goals. We found twenty-three countries that worked in conjunction with civil society groups and fifty-one countries that worked with influencers to spread computational propaganda”.

Indonesia’s “buzzer groups” who would volunteer to work with political campaigns during the 2019 elections are mentioned as an example of this method.

According to one of the authors, Samantha Bradshaw, cyber troop activity can look different in democracies compared to authoritarian regimes:

“Electoral authorities need to consider the broader ecosystem of disinformation and computational propaganda, including private firms and paid influencers, who are increasingly prominent actors in this space.”

Account Types

The research found evidence of automated accounts being used in 57 countries, such as bots set up by various public institutions in Honduras, including the National Television Station.

Human-operated accounts can be either real or fake. In the United States, for example, the researchers found that teenagers were enlisted by a pro-Trump youth group, Turning Point Action, to spread pro-Trump narratives, as well as disinformation about topics such as mail-in ballots or the impact of the coronavirus.

Another type of real-human account, less common, includes hacked, stolen or impersonation accounts (groups, pages, or channels), which are then co-opted to spread computational propaganda.

Strategies and Tactics

The researchers have grouped the activity of cyber troops into the following categories:

Creation of disinformation or manipulated media. This includes creating so-called “fake news” websites, doctored memes, images or videos, or other forms of deceptive content online. This is the most prominent type of communication strategy, found in 76 countries. OII found few examples of “deep fake” technology being used for political deception. Doctored images and videos remain the most important form of manipulated media.

One example mentioned is the manipulated video of the Argentinian Minister of Security, Patricia Bullrich, circulated before the 2019 elections and edited to make her appear intoxicated.

Data-driven strategies to profile and target specific segments of the population with political advertisements. For example, during the 2019 General Election in the UK, First Draft News identified that 90% of the Conservative Party’s Facebook advertisements in the early days of December 2019 promoted claims labelled as misleading by Full Fact.

Instances of data-driven strategies were identified in 30 countries, in many cases facilitated by private firms that used social media platforms’ advertising infrastructure to target advertisements at both domestic and foreign audiences.

Trolling, doxing or online harassment – In 59 countries, evidence was found of trolls being used to attack political opponents, activists, or journalists on social media. Although often thought of as networks of young adults and students, these teams can comprise a wide range of individuals.

Cyber troop activity in Tajikistan, where the Ministry of Education and Science assigns trolling activities to teachers and university professors who will initiate coordinated campaigns to discredit opponents, is an example.

Mass-reporting of content or accounts – Posts by activists, political dissidents or journalists can be reported by a coordinated network of cyber troop accounts in order to game the automated systems social media companies use to flag, demote, or take down inappropriate content. Evidence of the mass-reporting of content and accounts was found in seven countries.

One example cited is the human networks of cyber troops in Pakistan, which both artificially boost political campaigns and mass-report tweets that oppose their agenda as spam, causing the Twitter algorithm to block that issue’s access to the trending panel. Recently Twitter has maintained a 0% compliance rate with government requests to take down content that would fall under cyber troop activities, as reported by the company in 2019.

The document says that Facebook and Google have also been a focus of cyber troops in Pakistan: on Facebook, Pakistan successfully restricted more than 5,700 posts between January and June 2019 and on Google, more than 3,299 posts were requested to be removed between January and June 2019, as reported by Google.

Messaging and valence

OII’s report revealed that cyber troops use a variety of messaging and valence – the attractiveness (goodness) or averseness (badness) of a message, event, or thing – strategies when communicating with users online, classified as:

2. Attacking the opposition or mounting smear campaigns – One example includes China-backed cyber troops who continue to use social media platforms to launch smear campaigns against Hong Kong protesters.

3. Suppressing participation through trolling or harassment – Cyber troops are increasingly adopting the vocabulary of harassment to silence political dissent and freedom of the press. One example from 2019-2020 includes the Guatemalan “net centres” which use fake accounts that label individuals as “terrorists or foreign invaders” and target journalists with vocabulary associated with war, such as “enemies of the country”.

4. Populist political parties use social media narratives that drive division and polarize citizens – A recent example includes troll farms in Nigeria, with suspected connections to the Internet Research Agency in Russia. These troll farms are spreading disinformation and conspiracies around social issues in order to polarize online discourse, within Nigeria and as part of Russia’s foreign influence operations targeting the United States and the United Kingdom.

Big business

The way these groups operate varies from country to country. In some countries, teams emerge temporarily around elections or to shape public attitudes around other important political events. In others, cyber troops are integrated into the media and communication landscape with full-time staff working to control, censor, and shape conversations and information online.

This is the case in Venezuela. The report mentions documents leaked in 2018 describing how disinformation teams were organised along military lines: each person (or crew) could manage twenty-three accounts and be part of a squad (ten people), a company (fifty people), a battalion (one hundred people) or a brigade (five hundred people), which could operate as many as 11,500 accounts. People would “sign up for Twitter and Instagram accounts at government-sanctioned kiosks” and were rewarded with coupons for food and goods.
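The account totals implied by this structure can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes every member of a unit runs the full twenty-three accounts, and the names `UNIT_SIZES`, `ACCOUNTS_PER_PERSON` and `accounts_managed` are hypothetical rather than taken from the report.

```python
# Unit sizes (number of people) as described in the leaked documents
# cited by the report. A "crew" is a single person.
UNIT_SIZES = {
    "crew": 1,
    "squad": 10,
    "company": 50,
    "battalion": 100,
    "brigade": 500,
}

# Assumption: every person manages the maximum of twenty-three accounts.
ACCOUNTS_PER_PERSON = 23


def accounts_managed(unit: str) -> int:
    """Upper bound on the accounts a unit of the given type could operate."""
    return UNIT_SIZES[unit] * ACCOUNTS_PER_PERSON


if __name__ == "__main__":
    for unit in UNIT_SIZES:
        print(f"{unit}: {accounts_managed(unit):,} accounts")
```

Under that assumption a brigade of five hundred people works out to 11,500 accounts, which matches the figure quoted from the report.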

According to OII, between January 2019 and November 2020, cyber troop actors have spent over US $10 million on Facebook advertisements, and governments have signed more than US $60 million worth of contracts with private firms.

The researchers developed a simplistic measure to comparatively assess the capacity of cyber troop teams in relation to one another, taking into consideration the number of government actors involved, the sophistication of tools, the number of campaigns, the size and permanency of teams, and budgets or expenditures made. Countries were divided into three groups.

- High capacity: Australia, China, Egypt, India, Iran, Iraq, Israel, Myanmar, Pakistan, Philippines, Russia, Saudi Arabia, Ukraine, United Arab Emirates, United Kingdom, United States, Venezuela and Vietnam.

- Medium capacity: Armenia, Austria, Azerbaijan, Bahrain, Belarus, Bolivia, Brazil, Cambodia, Cuba, Czech Republic, Eritrea, Ethiopia, Georgia, Guatemala, Hungary, Indonesia, Kazakhstan, Kenya, Kuwait, Lebanon, Libya, Malaysia, Malta, Mexico, Nigeria, North Korea, Poland, Rwanda, South Korea, Sri Lanka, Syria, Taiwan, Tajikistan, Thailand, Turkey and Yemen.

- Low capacity: Angola, Argentina, Bosnia & Herzegovina, Colombia, Costa Rica, Croatia, Ecuador, El Salvador, Germany, Ghana, Greece, Honduras, Italy, Kyrgyzstan, Moldova, Netherlands, Oman, Qatar, Republic of North Macedonia, Serbia, South Africa, Spain, Sudan, Sweden, Tunisia, Uzbekistan, and Zimbabwe.

Industrialised disinformation has become more professionalized, and produced on a large scale by major governments, political parties, and public relations firms, says OII’s report

The report by Oxford Internet Institute concludes that the co-option of social media technologies should cause concern for democracies around the world—but so should many of the long-standing challenges facing democratic societies.

“Both the Covid-19 pandemic and the US election forced many social media firms to better flag misinformation, close fake accounts, and raise standards for both information quality and civility in public conversation. Not everyone agrees that these initiatives are sufficient. It is also not clear whether these more aggressive responses by social media firms will be applied to other issue areas or countries”.

The document states that computational propaganda has become a mainstay in public life and that these techniques will continue to evolve as new technologies, including Artificial Intelligence, Virtual Reality and the Internet of Things, are poised to fundamentally reshape society and politics. But it stresses that computational propaganda does not exist or spread independently:

“It is the result of poor technology design choices, lax public policy oversight, inaction by the leadership of social media platforms, investments by authoritarian governments, political parties, and mainstream communications firms. Computational propaganda is also a driver of further democratic ills, including political polarization and diminished public trust in democratic institutions.

“Social media platforms can be an important part of democratic institutions, which can be strengthened by high-quality information. A strong democracy requires access to this information, where citizens are able to come together to debate, discuss, deliberate, empathize, make concessions and work towards consensus. There is plenty of evidence that social media platforms can be used for these things. But in this annual inventory, we find significant evidence that in more countries than ever, social media platforms serve up disinformation at the behest of major governments, political parties and public relations firms”.

Social media moderation

In her article for The Conversation, Hanna Bailey wrote that debates around the censoring of Trump and his supporters on social media cover only one facet of the industry’s disinformation crisis:

“As more countries invest in campaigns that seek to actively mislead their citizens, social media firms are likely to face increased calls for moderation and regulation — and not just of Trump, his followers and related conspiracy theories like QAnon”.

OII’s report revealed that between January 2019 and December 2020, Facebook removed 10,893 accounts, 12,588 pages and 603 groups from its platform. In the same period, Twitter removed 294,096 accounts and continues to remove accounts linked to the far right.
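These removal figures can be tallied against the “more than 317,000 accounts and pages” cited in the report’s key trends. The snippet below is a minimal sketch that assumes the Facebook and Twitter counts given here are the only inputs to that total; the variable names are hypothetical.

```python
# Removal counts as quoted in the article.
facebook_accounts = 10_893
facebook_pages = 12_588
facebook_groups = 603
twitter_accounts = 294_096

# Accounts and pages only, as in the report's headline figure.
accounts_and_pages = facebook_accounts + facebook_pages + twitter_accounts

# Including Facebook groups as well.
all_removals = accounts_and_pages + facebook_groups

print(f"Accounts and pages removed: {accounts_and_pages:,}")
print(f"Including groups: {all_removals:,}")
```

On these numbers, accounts and pages alone come to 317,577, consistent with the “more than 317,000” figure; adding the 603 groups gives 318,180.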

However, she notes that despite these account removals, the research shows that between January 2019 and December 2020 almost US$10 million was spent by cyber troops on political advertisements. She believes a crucial part of the story is that social media companies continue to profit from the promotion of disinformation on their platforms, a situation she says demands action.

“Calls for tighter regulation and firmer policing are likely to follow Facebook and Twitter until they truly get to grips with the tendency of their platforms to host, spread and multiply disinformation.”

She argues that these companies should increase their efforts to flag and remove disinformation, along with all cyber troop accounts that are used to spread harmful content online. Otherwise, the continued escalation in computational propaganda campaigns that the research has revealed will heighten political polarisation, diminish public trust in institutions, and further undermine democracy worldwide.